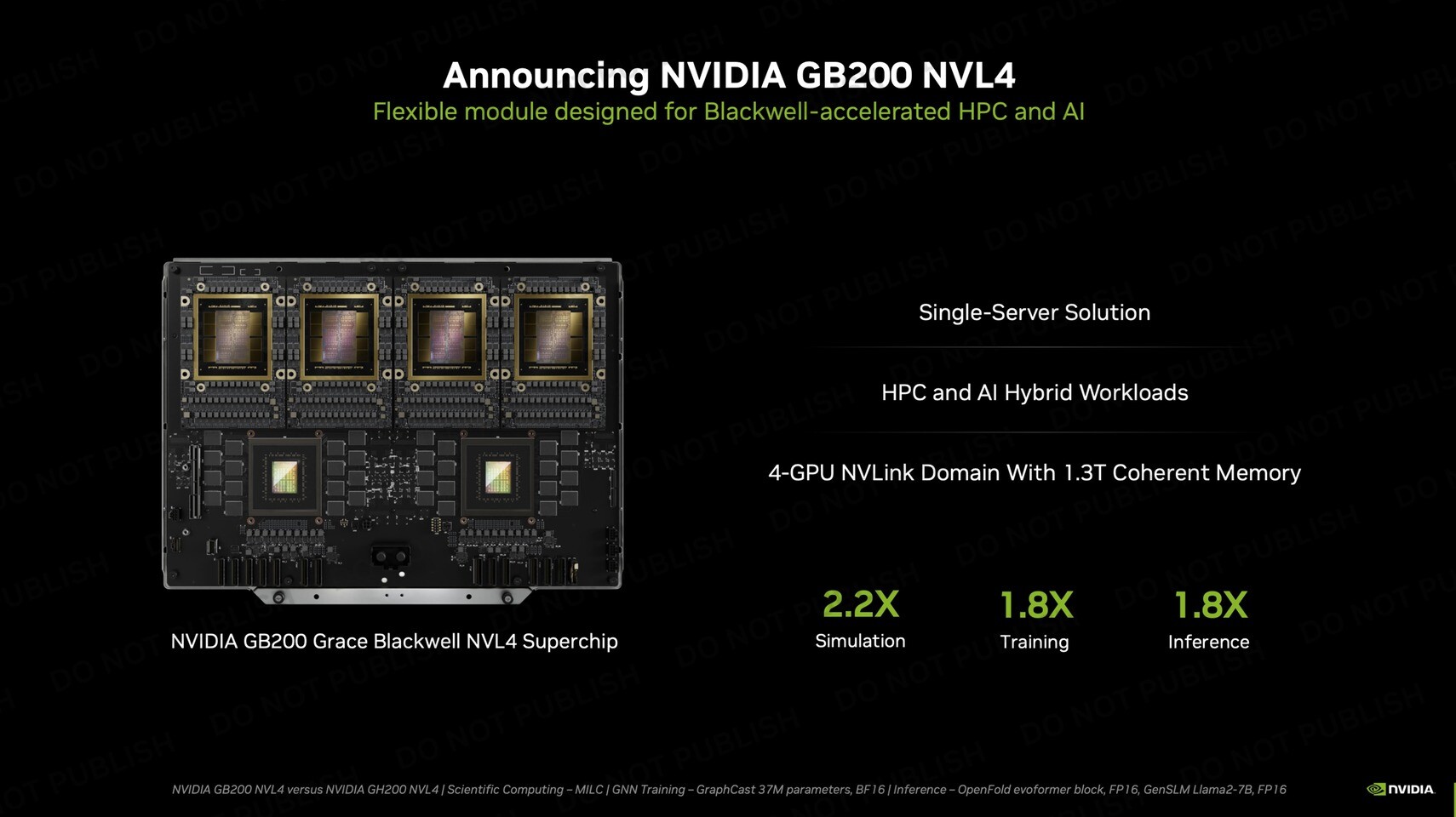

NVIDIA Unveils Latest Compute-Dense AI Accelerators at SC24

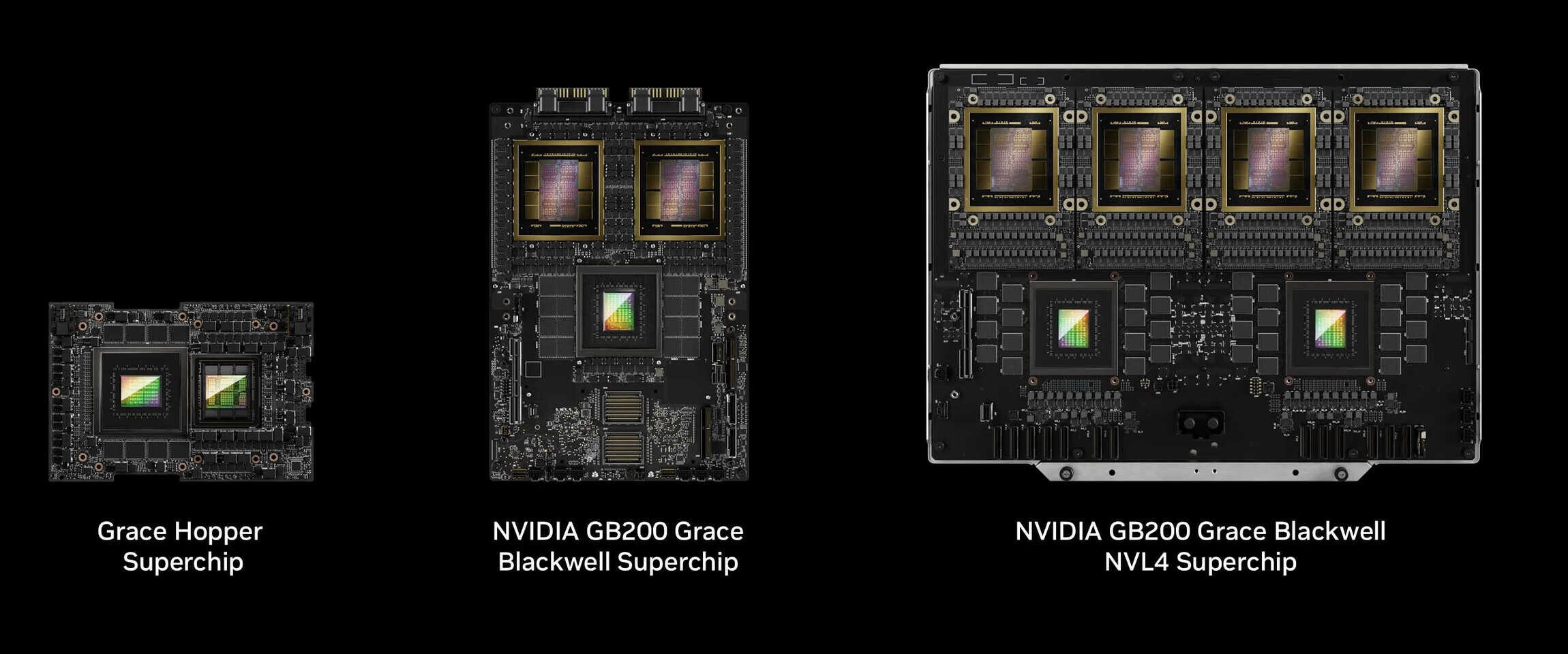

At SC24, NVIDIA introduced its newest compute-dense AI accelerator, the GB200 NVL4, a single-server solution that expands the company's "Blackwell" portfolio. The GB200 NVL4 combines four "Blackwell" GPUs and two "Grace" CPUs on a single board. The four Blackwell GPUs carry 768 GB of HBM3E memory with a combined memory bandwidth of 32 TB/s, while the two Grace CPUs add 960 GB of LPDDR5X memory, positioning the GB200 NVL4 for demanding AI workloads. NVLink interconnects link all processors on the board, so they can communicate directly during large training runs or when running inference on multi-trillion-parameter models.
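As a quick sanity check on those figures, the board-level totals can be broken down per processor. This is only a sketch: it assumes memory and bandwidth are split evenly across the GPUs and CPUs, which the article does not state, and the variable names are illustrative.

```python
# Board-level figures quoted in the article for the GB200 NVL4.
GPU_COUNT = 4
CPU_COUNT = 2
HBM3E_TOTAL_GB = 768
HBM_BANDWIDTH_TOTAL_TBPS = 32
LPDDR5X_TOTAL_GB = 960

# Assumption: resources are divided evenly between identical processors.
hbm_per_gpu_gb = HBM3E_TOTAL_GB / GPU_COUNT
bandwidth_per_gpu_tbps = HBM_BANDWIDTH_TOTAL_TBPS / GPU_COUNT
lpddr_per_cpu_gb = LPDDR5X_TOTAL_GB / CPU_COUNT

# Total memory on the board, HBM3E plus LPDDR5X.
total_memory_gb = HBM3E_TOTAL_GB + LPDDR5X_TOTAL_GB

print(f"HBM3E per GPU:     {hbm_per_gpu_gb:.0f} GB")
print(f"Bandwidth per GPU: {bandwidth_per_gpu_tbps:.0f} TB/s")
print(f"LPDDR5X per CPU:   {lpddr_per_cpu_gb:.0f} GB")
print(f"Total board memory: {total_memory_gb} GB")
```

Under the even-split assumption this works out to 192 GB of HBM3E and 8 TB/s per GPU, 480 GB of LPDDR5X per CPU, and 1,728 GB of memory on the board overall.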

NVIDIA claims significant gains over the previous generation, stating that the GB200 NVL4 delivers 2.2x faster overall performance and 1.8x faster training than its GH200 NVL4 predecessor. The system's power consumption reaches 5,400 watts, double that of the GB200 NVL2, which features two GPUs instead of four. Accommodating a 5,400 W TDP in a single server requires liquid cooling. The GB200 NVL4 is therefore expected to be installed in server racks for hyperscaler customers, who typically run custom liquid cooling systems in their data centers. NVIDIA is collaborating with OEM partners to bring various Blackwell solutions to market, including the DGX B200, GB200 Grace Blackwell Superchip, GB200 Grace Blackwell NVL2, GB200 Grace Blackwell NVL4, and GB200 Grace Blackwell NVL72.
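The power comparison can be worked through in a few lines. The sketch below derives the NVL2 figure purely from the "double" relationship stated above rather than from a published specification, and the per-GPU number is a whole-board average that also includes the Grace CPUs and other components.

```python
# Figures taken from the article.
NVL4_POWER_W = 5400
NVL4_GPUS = 4
NVL2_GPUS = 2

# Assumption: "double" is exact, so the NVL2 draws half the NVL4 figure.
nvl2_power_w = NVL4_POWER_W / 2

# Board power averaged per GPU (includes CPU and other board overhead).
nvl4_w_per_gpu = NVL4_POWER_W / NVL4_GPUS
nvl2_w_per_gpu = nvl2_power_w / NVL2_GPUS

print(f"Implied NVL2 power: {nvl2_power_w:.0f} W")
print(f"NVL4 board power per GPU: {nvl4_w_per_gpu:.0f} W")
print(f"NVL2 board power per GPU: {nvl2_w_per_gpu:.0f} W")
```

On these numbers the implied NVL2 draw is 2,700 W, and both boards average 1,350 W per GPU, so power appears to scale linearly with GPU count.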