NVIDIA Hopper Architecture Transforms AI and HPC Landscape

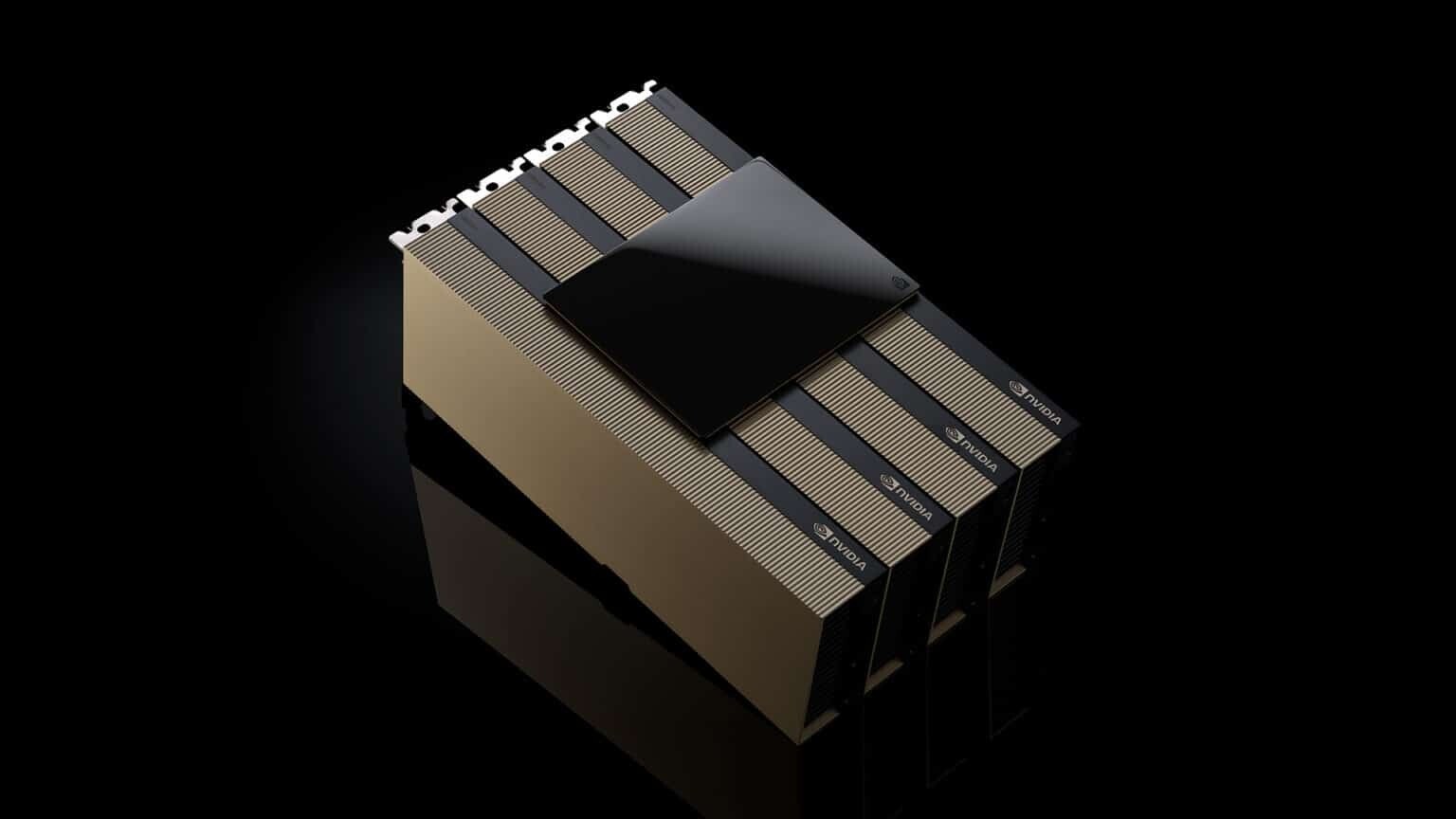

Since its introduction, the NVIDIA Hopper architecture has reshaped AI and high-performance computing (HPC), helping enterprises, researchers, and developers tackle complex problems with higher performance and better energy efficiency. At the Supercomputing 2024 conference, NVIDIA unveiled the NVIDIA H200 NVL PCIe GPU as the newest member of the Hopper family. The H200 NVL is designed for organizations whose data centers need lower-power, air-cooled enterprise rack designs with flexible configurations to accelerate AI and HPC workloads of any size.

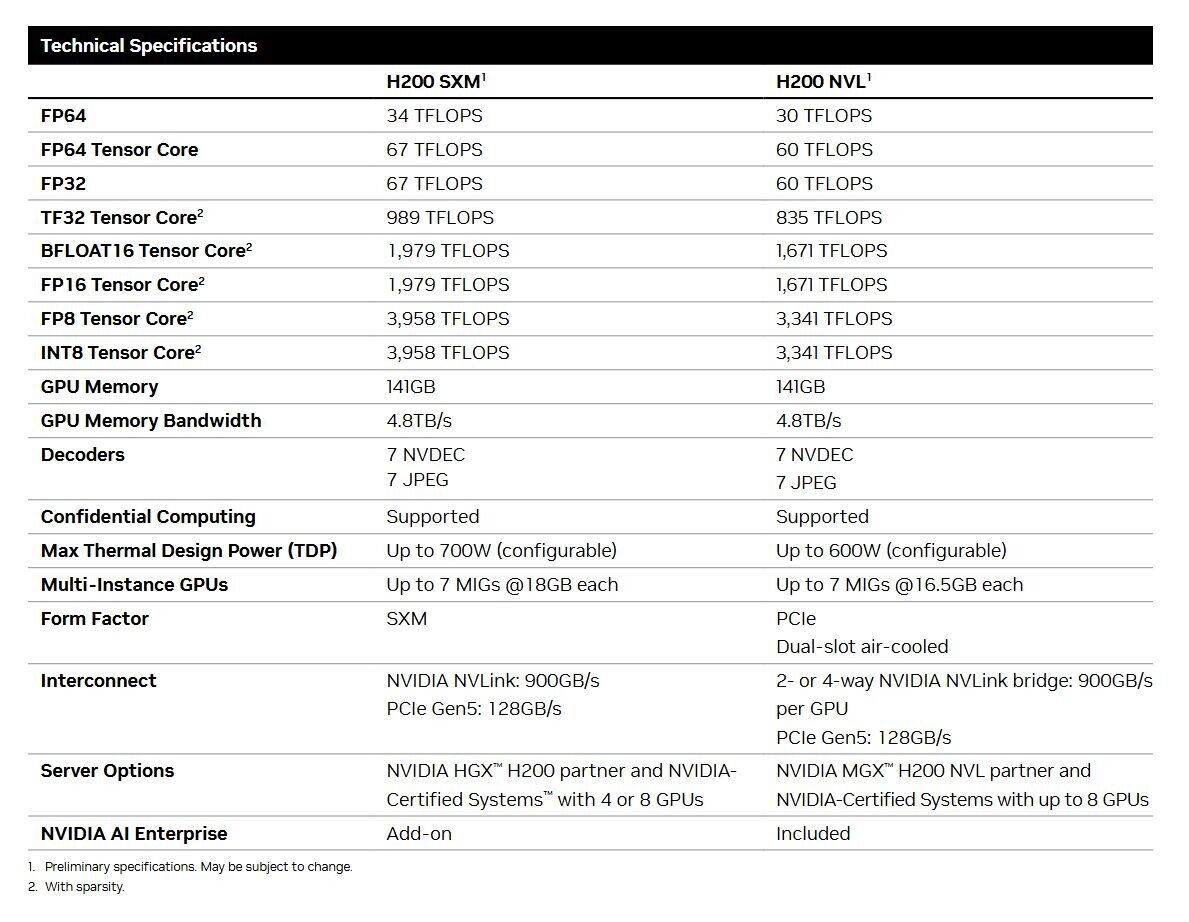

Approximately 70% of enterprise racks are air cooled and draw 20 kW or less, which makes PCIe GPUs crucial for flexible node deployment. The H200 NVL lets data centers fit more computing power into limited space by offering configurations of one, two, four, or eight GPUs. Companies can keep their existing racks and choose the number of GPUs that best fits their requirements. The H200 NVL improves energy efficiency by reducing power consumption, and it provides 1.5x the memory and 1.2x the bandwidth of the NVIDIA H100 NVL. This enables companies to fine-tune large language models (LLMs) quickly and achieve up to 1.7x faster inference performance. For HPC workloads, the H200 NVL delivers a 1.3x performance boost over the H100 NVL and a 2.5x improvement over the NVIDIA Ampere architecture generation. NVIDIA NVLink technology further improves performance by enabling GPU-to-GPU communication that is 7x faster than fifth-generation PCIe.
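The memory, bandwidth, and interconnect ratios quoted above can be sanity-checked with simple arithmetic. The sketch below is illustrative only: the per-GPU figures are assumptions taken from NVIDIA's publicly listed specifications, not from this article, and the dictionary and variable names are hypothetical.

```python
# Assumed per-GPU specifications (from public datasheets, not from this article).
H100_NVL = {"memory_gb": 94, "bandwidth_tb_s": 3.9}
H200_NVL = {"memory_gb": 141, "bandwidth_tb_s": 4.8}

# Assumed interconnect bandwidths in GB/s.
NVLINK_GB_S = 900      # NVLink GPU-to-GPU bandwidth
PCIE_GEN5_GB_S = 128   # PCIe Gen5 x16 bandwidth


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


memory_ratio = ratio(H200_NVL["memory_gb"], H100_NVL["memory_gb"])
bandwidth_ratio = ratio(H200_NVL["bandwidth_tb_s"], H100_NVL["bandwidth_tb_s"])
nvlink_ratio = ratio(NVLINK_GB_S, PCIE_GEN5_GB_S)

print(f"Memory:    {memory_ratio:.2f}x")
print(f"Bandwidth: {bandwidth_ratio:.2f}x")
print(f"NVLink vs PCIe Gen5: {nvlink_ratio:.2f}x")
```

Under these assumed figures, the computed ratios round to the 1.5x, 1.2x, and 7x values cited in the article.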

The H200 NVL is complemented by software tools that help enterprises accelerate applications from AI to HPC. It comes with a five-year subscription to NVIDIA AI Enterprise, a cloud-native software platform for developing and deploying AI models. NVIDIA AI Enterprise includes NVIDIA NIM microservices for secure, reliable deployment of high-performance AI model inference.

Companies Leveraging the Power of H200 NVL

Various industries are benefiting from the H200 NVL for AI and HPC applications. Dropbox is utilizing NVIDIA accelerated computing to enhance its services and infrastructure, while the University of New Mexico is leveraging NVIDIA accelerated computing for research and academic purposes.

Leading technology companies such as Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro are expected to offer a range of configurations supporting the H200 NVL. Additionally, platforms from Aivres, ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, MSI, Pegatron, QCT, Wistron, and Wiwynn will feature the H200 NVL.

Systems based on the NVIDIA MGX modular architecture enable computer manufacturers to efficiently build diverse data center infrastructure designs. The H200 NVL will be available from NVIDIA's global systems partners starting in December, with an Enterprise Reference Architecture in development to guide partners and customers in designing and deploying high-performance AI infrastructure based on the H200 NVL.