AMD Updates AI Accelerator Portfolio with Instinct MI325X

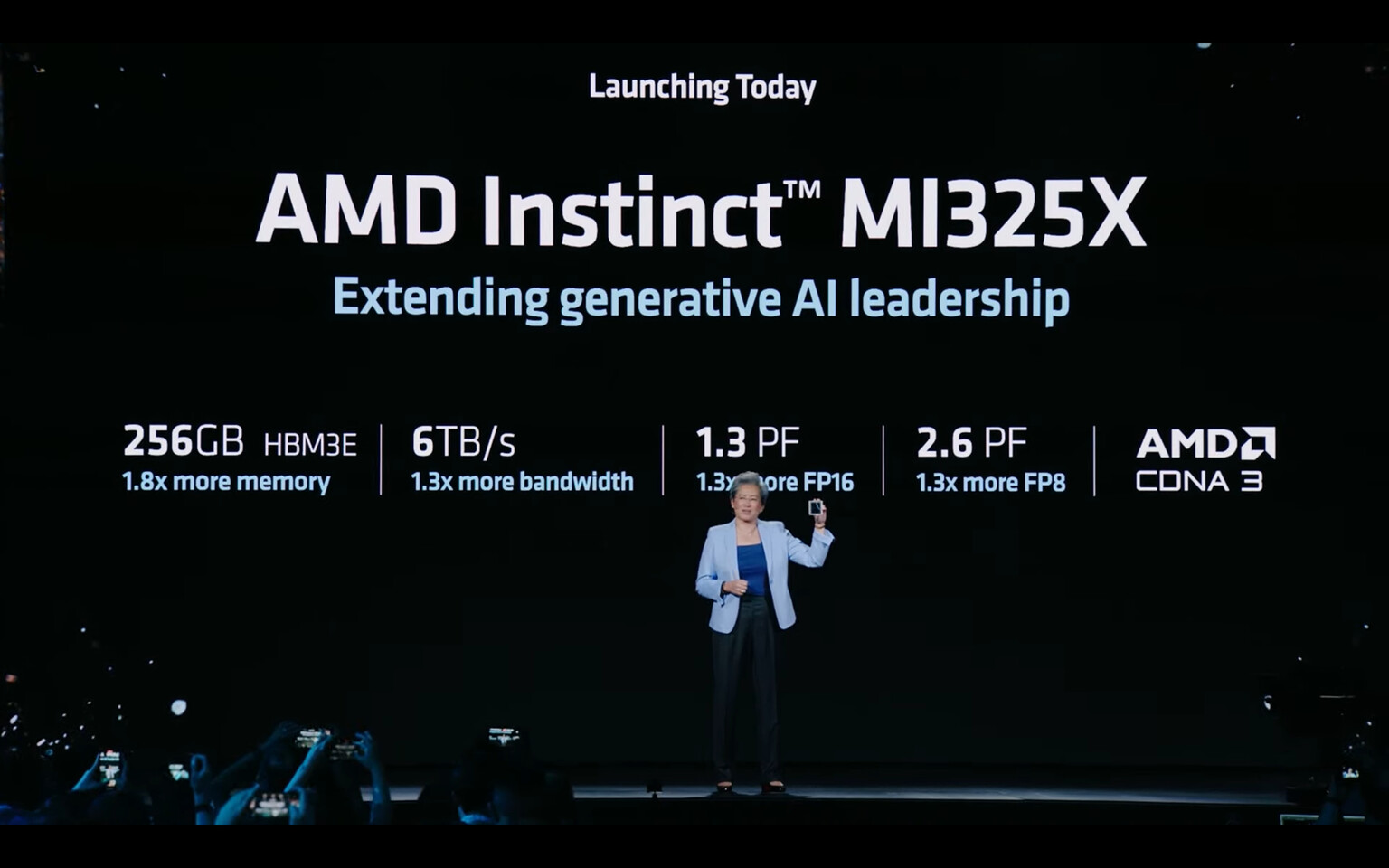

During its "Advancing AI" conference today, AMD announced the Instinct MI325X accelerator, the successor to the MI300X. Built on the same CDNA 3 architecture, the MI325X brings a range of improvements over the previous SKU. The new accelerator features 256 GB of HBM3E memory with 6 TB/s of bandwidth, a significant upgrade from the 192 GB of regular HBM3 memory in the MI300X. The extra capacity matters as AI models and their workloads continue to grow in size and complexity.

In terms of compute resources, the Instinct MI325X offers 1.3 PetaFLOPS of FP16 and 2.6 PetaFLOPS of FP8 compute for training and inference, representing a 1.3x improvement over the MI300. The MI325X OAM modules are designed as a drop-in replacement for the current platform, making it easy to upgrade from the MI300X.

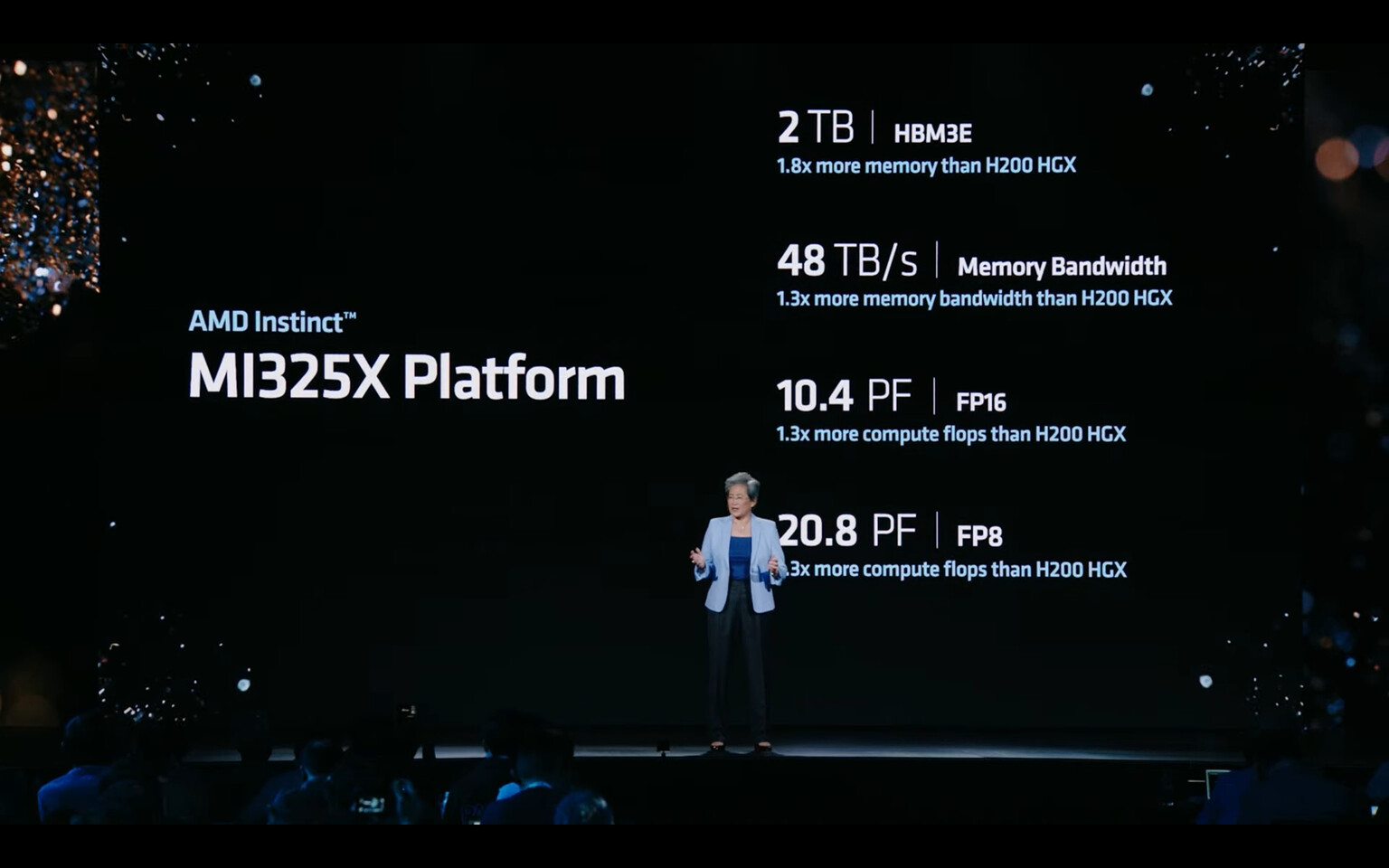

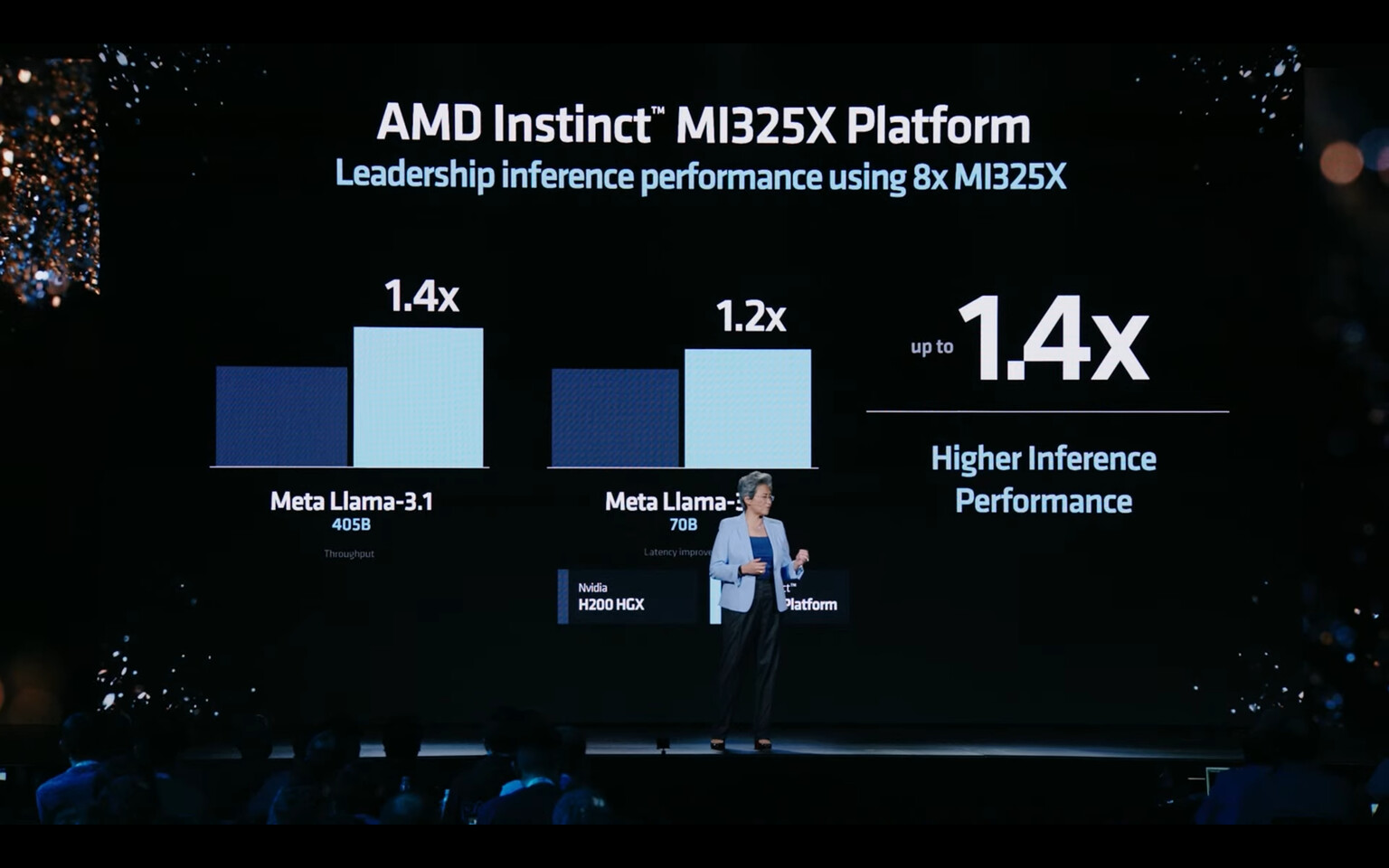

Systems with eight MI325X accelerators can achieve 10.4 PetaFLOPS of FP16 and 20.8 PetaFLOPS of FP8 compute performance, with 2 TB of HBM3E memory and 48 TB/s of aggregate memory bandwidth. AMD claims that the Instinct MI325X outperforms NVIDIA's HGX H200 system in memory bandwidth, compute performance, and memory capacity.
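The eight-accelerator figures follow from scaling the per-accelerator numbers linearly. A minimal sketch of that arithmetic, assuming peak figures simply add up across accelerators and that 1 TB is counted as 1024 GB (the dictionary keys and the `platform_totals` helper are illustrative names, not part of any AMD tool):

```python
# Per-accelerator peak figures quoted in the article for the Instinct MI325X.
MI325X = {
    "fp16_pflops": 1.3,
    "fp8_pflops": 2.6,
    "hbm_gb": 256,
    "bandwidth_tbps": 6,
}


def platform_totals(per_gpu, count=8):
    """Scale peak per-accelerator figures linearly by accelerator count.

    Assumption: peak numbers are additive; real workloads will not
    necessarily scale this way.
    """
    return {name: round(value * count, 3) for name, value in per_gpu.items()}


totals = platform_totals(MI325X)
for name, value in totals.items():
    print(f"{name}: {value}")

# Memory capacity expressed in TB, counting 1 TB as 1024 GB.
hbm_tb = totals["hbm_gb"] / 1024
print(f"hbm_tb: {hbm_tb}")
```

These are theoretical peaks, so the totals describe an upper bound for the platform rather than delivered performance.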

On the software side, the accelerator relies on the ROCm stack, which is being integrated with the latest features from frameworks like PyTorch, Triton, and ONNX. AMD also announced plans for the Instinct MI350X family in the second half of 2025, featuring the CDNA 4 architecture and support for lower-precision data types like FP4 and FP6. The upcoming Instinct MI355X accelerator will offer 288 GB of HBM3E memory and deliver 2.3 PetaFLOPS of FP16 and 4.6 PetaFLOPS of FP8 compute capability.
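To illustrate why narrower data types such as FP4 and FP6 matter alongside larger memory, the sketch below estimates how much space a model's weights alone would occupy at each precision. The 405-billion-parameter model size is a hypothetical value chosen for illustration, and the estimate ignores activations, KV cache, and any per-block scaling metadata that a real low-precision format may carry:

```python
# Bits used to store one value at each precision named in the article.
BITS_PER_VALUE = {"FP16": 16, "FP8": 8, "FP6": 6, "FP4": 4}

HBM_GB = 288          # MI355X memory capacity quoted in the article
NUM_PARAMS = 405e9    # hypothetical model size, for illustration only


def weight_footprint_gb(num_params, dtype):
    """Approximate storage for the weights alone, in GB (10**9 bytes)."""
    return num_params * BITS_PER_VALUE[dtype] / 8 / 1e9


for dtype in BITS_PER_VALUE:
    gb = weight_footprint_gb(NUM_PARAMS, dtype)
    fits = gb <= HBM_GB
    print(f"{dtype}: {gb:.1f} GB of weights, fits in {HBM_GB} GB: {fits}")
```

Under these assumptions, halving the bits per value halves the weight footprint, which is the main reason lower-precision formats let larger models fit on a single accelerator.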